
| perf.c | perf.rs | perf.swift |

#include <stdio.h>

#include <stdlib.h>

int main(int argc, char **argv) {

int element = 0;

int iteration = 0;

int iterations = 0;

int innerloop = 0;

double sum = 0.0;

int array_length = 100000000;

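double *array = (double*)malloc(array_length * sizeof(double));

/* The array needs about 800 MB; stop early if the allocation fails. */

if (array == NULL) {

perror("malloc");

return 1;

}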

if (argc > 1)

iterations = atoi(argv[1]);

printf("iterations %d\n", iterations);

for (element = 0; element < array_length; element++)

array[element] = element;

for (iteration = 0; iteration < iterations; iteration++)

for (innerloop = 0; innerloop < 1000000000; innerloop++)

sum += array[(iteration + innerloop) % array_length];

printf("sum %f\n", sum);

free(array);

array = NULL;

return 0;

}

|

use std::env;

fn main() {

let iterations: usize;

let mut sum: f64 = 0.0;

let array_length: usize = 100000000;

let args: Vec<String> = env::args().collect();

let mut array: Vec<f64> = vec![0.0; array_length];

iterations = (&args[1]).parse().expect("Not a number");

println!("iterations {}", iterations);

for element in 0..array_length {

array[element] = element as f64;

}

for iteration in 0..iterations {

for innerloop in 0..1000000000 {

sum += array[(iteration + innerloop) % array_length];

}

}

println!("sum {}", sum);

}

|

import Swift

var element = 0

var iteration = 0

var iterations = 0

var innerloop = 0

var sum = 0.0

let array_length = 100000000

var array: [Double] = Array(repeating: 0.0, count: array_length)

iterations = Int(CommandLine.arguments[1]) ?? 0

print("iterations \(iterations)")

for element in 0...array_length-1 {

array[element] = Double(element)

}

// A half-open range also works when iterations is 0; 0...iterations-1 would trap in that case.

for iteration in 0..<iterations {

for innerloop in 0...1000000000-1 {

sum += array[(iteration + innerloop) % array_length]

}

}

print("sum \(sum)")

|

| mem.c | mem.rs | mem.swift |

#include <stdio.h>

#include <stdlib.h>

#include <unistd.h>

#ifdef USE_TRIM

#include <malloc.h> /* declares malloc_trim (glibc-specific) */

#endif

typedef struct {

int data1;

int data2;

} my_data_t;

void allocate(void)

{

my_data_t *my_data = NULL;

my_data_t **array = NULL;

int element = 0;

int list_size = 10000000;

double sum = 0.0;

for (element = 0; element < list_size; element++) {

my_data = (my_data_t*)malloc(sizeof(my_data_t));

my_data->data1 = element;

my_data->data2 = element;

array = (my_data_t**)realloc(array, sizeof(my_data_t*)*(element+1));

array[element] = my_data;

}

for (element = 0; element < list_size; element++) {

my_data = array[element];

sum += my_data->data1;

sum += my_data->data2;

free(my_data);

}

free(array);

#ifdef USE_TRIM

malloc_trim(0);

#endif

printf("sum %E\n", sum);

}

void waitsec(int sec)

{

int i;

for (i = 0; i < sec; i++)

sleep(1);

}

int main(int argc, char **argv)

{

waitsec(10);

allocate();

waitsec(600);

allocate();

allocate();

waitsec(600);

return 0;

}

|

use std::thread::sleep;

struct my_data_t {

data1: usize,

data2: usize,

}

fn allocate() {

let list_size: usize = 10000000;

let mut array: Vec<my_data_t> = Vec::new();

let mut sum: f64 = 0.0;

for element in 0..list_size {

let mut my_data = my_data_t {data1: 0, data2: 0};

my_data.data1 = element;

my_data.data2 = element;

array.push(my_data);

}

for element in 0..list_size {

let my_data = &array[element];

sum += my_data.data1 as f64;

sum += my_data.data2 as f64;

}

println!("sum {}", sum);

}

fn waitsec(sec: u32) {

for _ in 0..sec {

sleep(std::time::Duration::from_secs(1));

}

}

fn main() {

waitsec(10);

allocate();

waitsec(600);

allocate();

allocate();

waitsec(600);

}

|

import Swift

import Foundation

struct my_data_t {

var data1: Int = 0

var data2: Int = 0

}

func allocate() {

let list_size = 10000000

var array: [my_data_t] = Array()

var sum = 0.0

for element in 0...list_size-1 {

var my_data = my_data_t()

my_data.data1 = element

my_data.data2 = element

array.append(my_data)

}

for element in 0...list_size-1 {

let my_data = array[element]

sum += Double(my_data.data1)

sum += Double(my_data.data2)

}

print("sum \(sum)")

}

func waitsec(sec: Int) {

for _ in 0...sec-1 {

sleep(1);

}

}

waitsec(sec: 10)

allocate()

waitsec(sec: 600)

allocate()

allocate()

waitsec(sec: 600)

|
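The three perf listings above run the same kernel: fill an array with its own indices, then repeatedly sum elements at `(iteration + innerloop) % array_length`. The following is a scaled-down sketch of that kernel in Rust, not the benchmark itself. The function name `run_kernel`, its parameters, and the small sizes in `main` are assumptions chosen for illustration so that the result can be checked by hand. It also uses `std::time::Instant` for in-process timing, where the original setup relies on `time -p`.

```rust
use std::time::Instant;

/// Same access pattern as the perf listings, with all sizes passed in
/// (hypothetical parameters) so the sketch finishes quickly.
fn run_kernel(array_length: usize, iterations: usize, inner: usize) -> f64 {
    // array[i] == i, as in the original fill loop.
    let array: Vec<f64> = (0..array_length).map(|i| i as f64).collect();
    let mut sum = 0.0;
    for iteration in 0..iterations {
        for innerloop in 0..inner {
            // The modulo keeps the index inside the array while the
            // starting offset shifts by one on each outer iteration.
            sum += array[(iteration + innerloop) % array_length];
        }
    }
    sum
}

fn main() {
    let start = Instant::now();
    let sum = run_kernel(1_000, 10, 100_000);
    println!("sum {} in {:?}", sum, start.elapsed());
}
```

With an array of length 4 and a single pass of 8 inner steps, the indices visited are 0, 1, 2, 3, 0, 1, 2, 3, so the sum is 12. The same kind of small case can be used to confirm that the C, Rust and Swift versions agree before timing the full-size runs.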

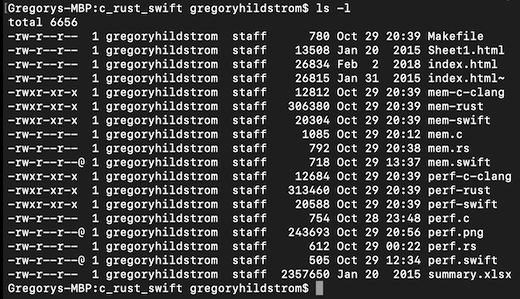

all: \
	perf-c-clang \
	perf-rust \
	perf-swift \
	mem-c-clang \
	mem-rust \
	mem-swift

perf-c-clang: perf.c
	clang -O3 -o perf-c-clang perf.c

perf-rust: perf.rs
	rustc -C opt-level=3 -o perf-rust perf.rs

perf-swift: perf.swift
	swiftc -O -o perf-swift -emit-executable perf.swift

mem-c-clang: mem.c
	clang -O3 -o mem-c-clang mem.c

mem-rust: mem.rs
	rustc -C opt-level=3 -o mem-rust mem.rs

mem-swift: mem.swift
	swiftc -O -o mem-swift -emit-executable mem.swift

clean:
	rm -f *.o *.so
	rm -f perf-c-clang
	rm -f perf-rust
	rm -f perf-swift
	rm -f mem-c-clang
	rm -f mem-rust
	rm -f mem-swift

run_perf_test: all
	echo "-------------------------------------"
	time -p ./perf-c-clang 100
	time -p ./perf-rust 100
	time -p ./perf-swift 100
	echo "-------------------------------------"

run_mem_test: all
	echo "-------------------------------------"
	./capture.sh mem-c-clang & ./mem-c-clang ; sleep 2
	./capture.sh mem-rust & ./mem-rust ; sleep 2
	./capture.sh mem-swift & ./mem-swift ; sleep 2
	echo "-------------------------------------"
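The `run_mem_test` target starts `./capture.sh` in the background for each binary, but that script is not shown here. Assuming it samples the resident memory of the named process while the program sleeps between `allocate()` calls (an assumption, not something the source confirms), one way to read that figure on Linux is the `VmRSS` line of `/proc/<pid>/status`. The sketch below shows only that parsing step in Rust. The function name `parse_vm_rss_kb` is hypothetical, and `main` reads the process's own status file as a stand-in for the benchmarked process.

```rust
use std::fs;

/// Extract the VmRSS value (in kB) from the text of /proc/<pid>/status.
/// Returns None if the line is missing or the number does not parse.
fn parse_vm_rss_kb(status: &str) -> Option<u64> {
    status
        .lines()
        .find(|line| line.starts_with("VmRSS:"))?
        .split_whitespace()
        .nth(1)? // second field is the number, third is the unit "kB"
        .parse()
        .ok()
}

fn main() {
    // Linux-only: /proc/self/status describes the current process.
    match fs::read_to_string("/proc/self/status") {
        Ok(text) => match parse_vm_rss_kb(&text) {
            Some(kb) => println!("VmRSS {} kB", kb),
            None => println!("VmRSS not found"),
        },
        Err(err) => println!("cannot read /proc/self/status: {}", err),
    }
}
```

Sampling this value once per second over the two 600-second waits would show whether memory freed by `allocate()` is returned to the operating system, which is what the optional `malloc_trim(0)` call in mem.c affects.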